In the 1930s, Alan Turing (dramatized by Benedict Cumberbatch in The Imitation Game) imagined a universal computing machine that could store both its data and its program in memory. Early computers of the 1940s, such as the ENIAC, had no programs as such. Instead, they were hard-wired to perform specific calculations. Programming them meant rewiring their circuit cabinets, a process that could take several days.

Turing's ideas began to be realized in 1945, when mathematician and physicist John von Neumann described the design of the EDVAC, one of the first stored-program computer systems, whose machine-language instructions were stored in memory as binary numeric codes.

To run a program on the EDVAC, each instruction was fetched from memory by the CPU's control unit (CU) and placed in a register on the CPU. The control unit then decoded the instruction, and the arithmetic/logic unit (ALU) executed it. Finally, the results were written back into memory, where they could be examined. This sequence (fetch, decode, execute, and store) is known as the instruction cycle, and it is still how every stored-program computer works.
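To make the instruction cycle concrete, here is a minimal C++ sketch of a toy stored-program machine. Its four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), its two-byte instruction format, and the names `ToyCpu`, `pc`, `ir`, and `acc` are hypothetical, invented for illustration; they are not the EDVAC's real design. Program and data share one memory array, and each pass through the loop fetches an instruction into registers, decodes it, executes it, and (for `STORE`) writes the result back to memory.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// HYPOTHETICAL instruction set, invented for illustration only.
// Each instruction occupies two memory words: an opcode, then an address.
enum Opcode : std::uint8_t { HALT = 0, LOAD = 1, ADD = 2, STORE = 3 };

struct ToyCpu {
    std::array<std::uint8_t, 32> memory{};  // holds the program AND its data
    std::uint8_t pc = 0;       // program counter: address of next instruction
    std::uint8_t ir = 0;       // instruction register: the fetched opcode
    std::uint8_t operand = 0;  // the fetched address operand
    std::uint8_t acc = 0;      // accumulator: holds the latest result

    // Repeats the instruction cycle until HALT (or until the program counter
    // runs off the end of memory). Returns the number of instructions
    // fetched. memory.at() throws std::out_of_range on a bad data address.
    int run() {
        int fetched = 0;
        while (static_cast<std::size_t>(pc) + 1 < memory.size()) {
            // Fetch: copy the instruction from memory into CPU registers.
            ir = memory[pc];
            operand = memory[pc + 1];
            pc = static_cast<std::uint8_t>(pc + 2);
            ++fetched;

            // Decode the opcode, then execute it.
            switch (ir) {
                case LOAD:
                    acc = memory.at(operand);
                    break;
                case ADD:
                    acc = static_cast<std::uint8_t>(acc + memory.at(operand));
                    break;
                case STORE:
                    // Store: write the result back into memory.
                    memory.at(operand) = acc;
                    break;
                case HALT:
                default:  // HALT or any unknown opcode stops the machine
                    return fetched;
            }
        }
        return fetched;
    }
};
```

For example, placing the program `LOAD 20, ADD 21, STORE 22, HALT` at address 0 and the values 7 and 5 at addresses 20 and 21, then calling `run()`, leaves 12 at address 22 after four instructions.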

Here's an example. One Intel CPU instruction that copies a value from memory into the CX register is 8B4E06. We humans see this as a hexadecimal (base 16) number. The computer, on the other hand, is a digital electronic device that doesn't know about numbers at all; it is built from integrated circuits containing transistor switches, each of which is either on or off. We humans interpret the "on" state as a 1 and the "off" state as a 0.

So, how does the computer "know" what to do with the instruction 8B4E06? It doesn't! In binary, this instruction is 100010110100111000000110, but inside the hardware it is simply a pattern of switches: electricity flows through each 1 to another part of the device, and each 0 blocks the flow.
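Each hexadecimal digit stands for exactly four bits, which is why the six hex digits 8B4E06 become a 24-bit pattern. (In 16-bit x86 assembly language, these three bytes encode `mov cx, [bp+6]`.) The short C++ sketch below uses a hypothetical helper, `hexToBinary`, to expand a hex string one digit at a time, so the four-bits-per-digit rule is visible.

```cpp
#include <bitset>
#include <cctype>
#include <cstddef>
#include <stdexcept>
#include <string>

// Hypothetical helper: expands a hexadecimal string into binary digits,
// four bits per hex digit. Throws std::invalid_argument on a non-hex digit.
std::string hexToBinary(const std::string& hex) {
    const std::string digits = "0123456789ABCDEF";
    std::string bits;
    for (char c : hex) {
        char upper = static_cast<char>(std::toupper(static_cast<unsigned char>(c)));
        std::size_t value = digits.find(upper);  // position == digit value
        if (value == std::string::npos) {
            throw std::invalid_argument("not a hex digit");
        }
        bits += std::bitset<4>(value).to_string();  // e.g. B -> "1011"
    }
    return bits;
}
```

The standard library can do the whole conversion in one call, `std::bitset<24>(0x8B4E06).to_string()`, which yields the same 24 characters; the loop above simply spells out the digit-by-digit mapping.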

As a physical analogy, imagine a player-piano roll, where each hole in the paper causes a note to be played by allowing a hammer to strike a particular string.

Machine code is also called native code, since the computer can use it without any translation. Machine-language programs are difficult to understand and inherently non-portable, since each is designed for a single type of CPU.

Yet some high-performance programs are still written in machine language (or in its symbolic form, assembly language). You can examine the assembly-language source code for the Apple II Disk Operating System, written by Steve Wozniak, at the Computer History Museum.

Machine and assembly language are low-level languages, tied to a specific CPU's instruction set. In the mid-1950s, John Backus led a team at IBM that developed the first widely used high-level programming language, FORTRAN (FORmula TRANslator), which allowed scientists and engineers to write their own programs.

COBOL (the COmmon Business-Oriented Language) allowed accountants and bankers to write programs using a vocabulary with which they were comfortable. In 1958, John McCarthy at MIT designed a third high-level language, LISP (the LISt Processing language), to help him with his research into artificial intelligence.

These three high-level languages allowed non-specialists to write their own programs, but they were not general-purpose languages: each was tailored to one domain, so scientists could not easily use COBOL to write code for NASA, nor could accountants easily use FORTRAN.

In 1960, an international committee whose members included John Backus (FORTRAN) and John McCarthy (LISP) met in Paris to remedy that, producing the ALGOrithmic Language (ALGOL). Modern languages derive much of their syntax and many core concepts from ALGOL.

Many new languages followed in the decades since. Here are two:

- BASIC, the Beginner's All-purpose Symbolic Instruction Code, was developed at Dartmouth College in 1964. Professors John Kemeny and Thomas Kurtz wanted to teach programming to students using the new interactive, time-sharing computers. BASIC was later one of the first languages available on microcomputers; Bill Gates and Paul Allen implemented it for the Altair. Later still, in the 1990s, Microsoft introduced Visual Basic, a graphical version that became popular in the business world.

- In 1970, the Swiss computer scientist Niklaus Wirth, who felt that BASIC was teaching students bad programming habits, created the popular teaching language Pascal (named after the mathematician and philosopher Blaise Pascal). Strongly influenced by ALGOL, Pascal enforced structured programming techniques. It was the first programming language taught in most university computing programs until about 2000. Wirth later designed Modula and Oberon, and Pascal influenced the design of Ada, a language still in wide use in avionics and defense.

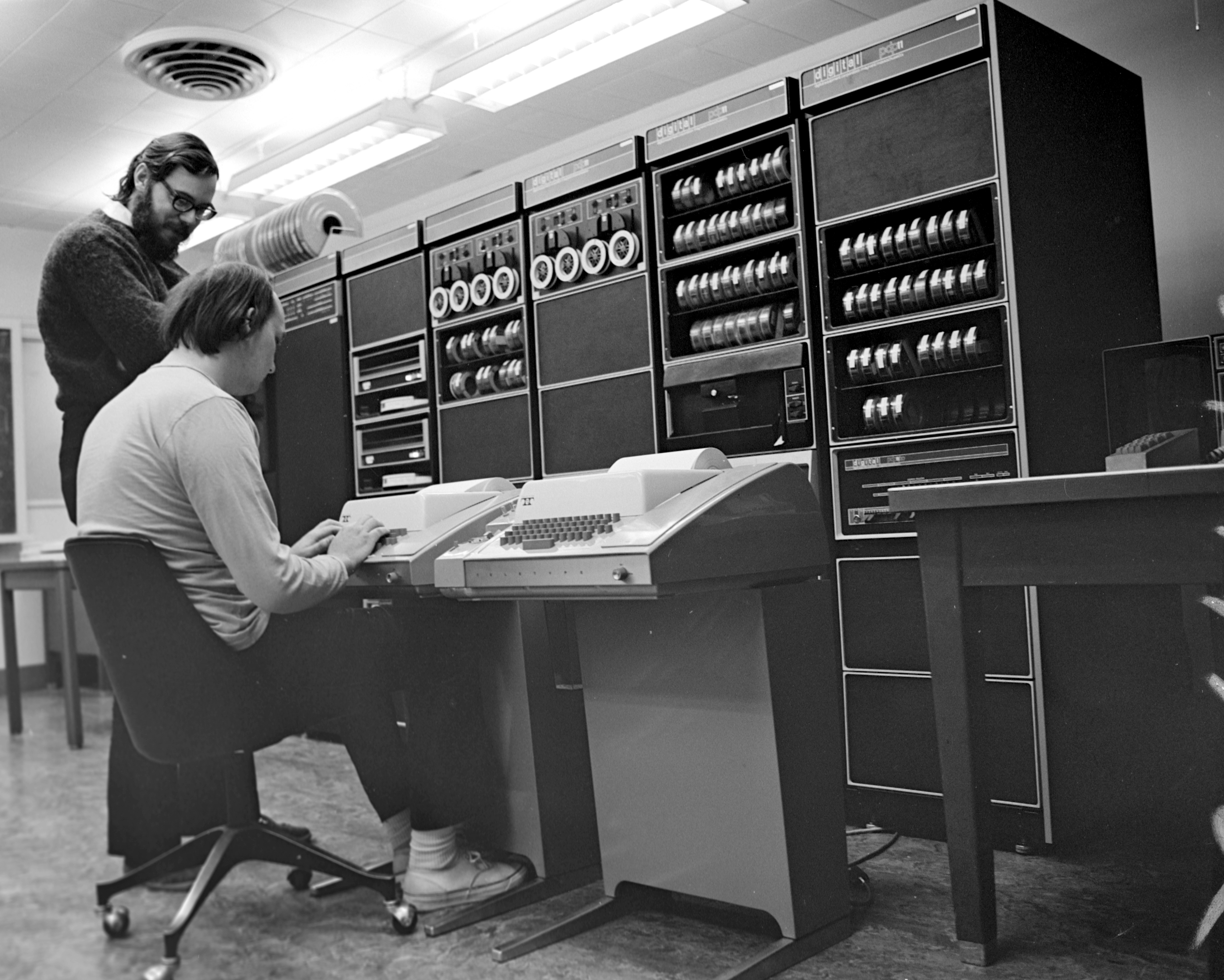

Around 1970, two researchers at Bell Labs in New Jersey, Ken Thompson and Dennis Ritchie, developed an operating system for the new wave of minicomputers just coming onto the market. They named their operating system Unix (or UNIX, if you like).

Unix was originally written in assembly language, and some of its early utilities were written in a high-level language named B. To simplify the development of Unix, Dennis Ritchie extended the B language to create the programming language C, and in 1973 the Unix kernel was rewritten in C, which made it far easier to move (or port) to other machines.

Both Unix and C have had an enormous impact on the field of computing. Many of today's most important languages descend from C or borrow its syntax, including C++, Java, and C#, which still use much of the original syntax designed by Ritchie.

C and Pascal are imperative, procedural languages, where the emphasis is on the actions that the computer should perform. In the procedural paradigm (or programming style), a program consists of a hierarchy of subprograms (procedures or functions) that process external data.

Object-oriented programming, or OOP, is a different style of programming. In the OOP paradigm, programs are communities of somewhat self-contained components (called objects) in which data and actions are combined.

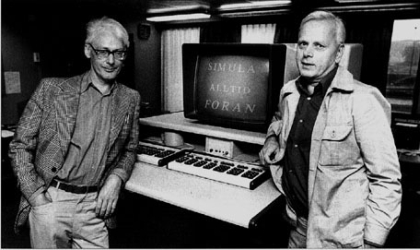
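To see the difference between the two paradigms, here is a minimal C++ sketch that models the same rectangle twice. The names `RectangleData`, `area`, `scale`, and `Rectangle` are hypothetical. In the procedural version, free functions process data held in a separate struct; in the object-oriented version, the data and the actions on it are combined in one object, and the data itself is private.

```cpp
// Procedural style: the data lives in a plain struct, outside the
// subprograms that process it.
struct RectangleData {
    double width;
    double height;
};

double area(const RectangleData& r) { return r.width * r.height; }

void scale(RectangleData& r, double factor) {
    r.width *= factor;
    r.height *= factor;
}

// Object-oriented style: data and the actions on it are combined in one
// self-contained object; only member functions can touch the data.
class Rectangle {
public:
    Rectangle(double width, double height) : width_(width), height_(height) {}

    double area() const { return width_ * height_; }

    void scale(double factor) {
        width_ *= factor;
        height_ *= factor;
    }

private:
    double width_;
    double height_;
};
```

With the procedural version you pass the data to a function, as in `scale(d, 2.0)`; with the object-oriented version you ask the object to act on itself, as in `r.scale(2.0)`.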

The first object-oriented language was SIMULA, a simulation language designed by the Norwegian computer scientists Ole-Johan Dahl and Kristen Nygaard; its object-oriented version, SIMULA 67, appeared in 1967. Much of the terminology we use today to describe object-oriented languages comes from their original 1967 report.

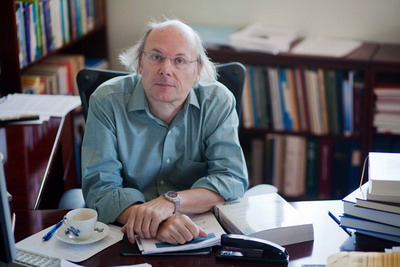

Bjarne Stroustrup, a Danish Ph.D. student at Cambridge University, was heavily influenced by SIMULA. In 1979, working as a researcher at AT&T Bell Labs, he began adding object-oriented features to C. This first version of what would later become C++ was named C with Classes.

Unlike idealistic languages that enforce "one true path" to programming utopia, C++ is pragmatic: it was designed as a multi-paradigm language.

In C++, the object-oriented and procedural programming styles are complementary, and you may find a use for each approach. So, consider each paradigm (or style) a tool that you can pull out of your toolbox when needed, applying the conceptual model that is most appropriate for the task at hand.
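As a small illustration of mixing the two styles, the sketch below pairs an object-oriented class with an ordinary procedural function in one program. The names `Account`, `deposit`, and `totalBalance` are hypothetical, and balances are kept in whole cents, an assumption made here to avoid floating-point rounding.

```cpp
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// Object-oriented piece: an account combines its data with the actions
// allowed on that data.
class Account {
public:
    explicit Account(std::string owner, long long cents = 0)
        : owner_(std::move(owner)), cents_(cents) {}

    // Ignores non-positive amounts, so a deposit can never lower the balance.
    void deposit(long long cents) {
        if (cents > 0) {
            cents_ += cents;
        }
    }

    long long balance() const { return cents_; }
    const std::string& owner() const { return owner_; }

private:
    std::string owner_;
    long long cents_;
};

// Procedural piece: a free function that processes a collection of objects
// through their public interface; it does not need to be a member function.
long long totalBalance(const std::vector<Account>& accounts) {
    return std::accumulate(accounts.begin(), accounts.end(), 0LL,
                           [](long long sum, const Account& a) {
                               return sum + a.balance();
                           });
}
```

Here `totalBalance` stays a free function because summing many accounts is not the responsibility of any single account; that is one reasonable design choice, not the only one.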